Python script (2024): Learn how to manage secure storage in AWS using S3 lifecycle configurations.

Quick overview of how to set up and run a .py program within Visual Studio Code using a virtual environment.

This was made in Visual Studio Code within a virtual environment.

The most important commands in working with your virtual environment are:

1. Create your virtual environment

> py -m venv env

2. Activate your virtual environment

> env\Scripts\activate

** It's also a good idea to update pip using the following command:

> py -m pip install --upgrade pip

3. Go to View > Command Palette > Python: Select Interpreter and choose the interpreter from your virtual environment ('env': venv)

4. Ensure you are using the PowerShell terminal. You may use a split terminal if you need to run a few Linux commands (WSL) as part of a project, but a split terminal is not necessary for this project.

5. Run your application

> py main.py

=====================================================

Screenshot of output

=====================================================

Next, import the correct libraries

boto3 is the Amazon Web Services SDK (software development kit) for Python. boto3 allows Python developers to interact with AWS services programmatically.

For this script, boto3 is being used to create and manage S3 buckets, upload files, and set bucket policies.

from botocore.exceptions import NoCredentialsError is used to handle the specific exception raised when boto3 cannot locate AWS credentials, for example when they have not been configured on the machine running the script.

import json is part of the Python standard library. The json module provides functions for parsing JSON strings and converting Python objects to JSON strings. For this project, json is used to handle the bucket policy by converting a Python dictionary into a JSON string.

import logging is also part of the Python standard library.

The logging module provides a flexible framework to record informational messages and errors.

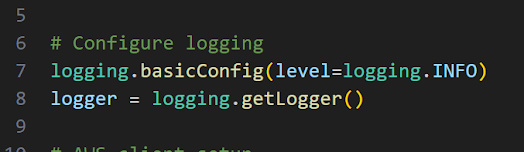

The following lines configure logging:

7 logging.basicConfig(level=logging.INFO)

Configures the logging to capture and display log messages at the INFO level and above (INFO, WARNING, ERROR, and CRITICAL). This means any log message with a severity level of INFO or higher shall be sent to output. Messages with lower severity levels (like DEBUG) shall be ignored.

8 logger = logging.getLogger()

Calling logging.getLogger() with no arguments returns the root logger. The logger object is used to log messages throughout the script. By calling methods like 'logger.info()' and 'logger.error()', you may output log messages with different severity levels.
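As a minimal sketch of the level filtering described above, the snippet below configures the root logger at INFO and emits one message at each of three levels. The `force=True` argument is an addition for this demo and is not part of the original script; it resets any handlers that are already installed so that the level reliably takes effect.

```python
import logging

# force=True is an assumption for this demo (Python 3.8+): it removes any
# handlers already attached to the root logger so the level is applied.
logging.basicConfig(level=logging.INFO, force=True)
logger = logging.getLogger()

logger.debug('Not shown: DEBUG is below the configured INFO level.')
logger.info('Shown: INFO meets the configured level.')
logger.error('Shown: ERROR is above the configured level.')
```

Only the INFO and ERROR messages should reach the output; the DEBUG message is filtered out.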

11 s3 = boto3.client('s3')

This line of code initialises a client to communicate with the Amazon S3 service using the boto3 library.

The call to boto3.client('s3') returns a client object which provides methods to interact with the S3 service, such as creating buckets, uploading files, and setting bucket policies.

14 BUCKET_NAME = '2024-three-mai-bash-project'

This constant defines the name of the S3 bucket which the script shall interact with.

15 FILE_NAME = 'test.txt'

This constant defines the name of the local file which shall be uploaded to the S3 bucket.

16 ENCRYPTED_FILE_NAME = 'encrypted_test.txt'

Provides the name of the file as it shall appear in the S3 bucket after being uploaded with encryption.

17 KMS_KEY_ID = '<key ID>'

This constant holds the AWS KMS (AWS Key Management Service) key identifier which shall be used to encrypt the file (server side encryption) when uploading the file to the S3 bucket.

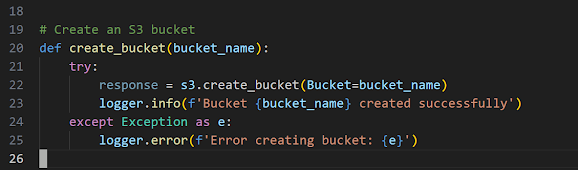

20 def create_bucket(bucket_name):

This line defines the function, which takes one parameter, 'bucket_name'. The purpose of this function is to create an S3 bucket with the name provided in the 'bucket_name' parameter.

21- 22 try:

The 'try' code block attempts to create the S3 bucket.

23 logger.info(f'Bucket {bucket_name} created successfully.')

If the bucket creation is successful, this line outputs an informational message indicating the bucket was created.

24 except Exception as e:

The 'except' block catches all exceptions which might be raised in the 'try' block and assigns the exception object to the variable 'e'.

25 logger.error(f'Error creating bucket: {e}')

If an error occurs, this line outputs an error message with the exception details. The f-string inserts the exception message into the error log.
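The lines walked through above can be assembled into one function. This is a sketch rather than the original script: the call on line 22 is not shown in the walkthrough, so `s3_client.create_bucket(Bucket=bucket_name)` is an assumption, and passing the client in as the hypothetical `s3_client` parameter (instead of using the module-level `s3` object) and returning `True`/`False` are changes made so the function can be tried without touching AWS.

```python
import logging

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger()


def create_bucket(s3_client, bucket_name):
    """Create an S3 bucket and log the outcome; return True on success."""
    try:
        # Assumed content of line 22. Outside us-east-1, S3 also requires
        # CreateBucketConfiguration={'LocationConstraint': '<region>'}.
        s3_client.create_bucket(Bucket=bucket_name)
        logger.info(f'Bucket {bucket_name} created successfully.')
        return True
    except Exception as e:
        # Any failure (missing credentials, name already taken, ...) lands here.
        logger.error(f'Error creating bucket: {e}')
        return False
```

With real credentials configured, this could be called as `create_bucket(boto3.client('s3'), BUCKET_NAME)`.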

The following code block explains the function

28 def upload_encrypted_file(bucket_name, file_name, encrypted_file_name, kms_key_id):

The function uploads a file to an S3 bucket with server-side encryption using AWS Key Management Service (KMS).

29 The try code block uploads the file and handles any exceptions which may occur during the process.

31 with open(file_name, 'rb') as data:

The context manager ('with') ensures that the file is properly closed after the code block is executed, even if an error occurs.

Opens the file in binary read mode ('rb').

'data' - The file object which may be read and uploaded.
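To illustrate the point about the context manager, here is a small sketch that opens a temporary file in 'rb' mode, simulates a failure inside the 'with' block, and then checks that the file was closed anyway. The temporary file and the `RuntimeError` are stand-ins invented for this demo; they are not part of the original script.

```python
import os
import tempfile

# Create a throwaway file to stand in for the script's test.txt.
with tempfile.NamedTemporaryFile('wb', delete=False) as tmp:
    tmp.write(b'hello')
    path = tmp.name

handle = None
try:
    with open(path, 'rb') as data:
        handle = data               # keep a reference to inspect afterwards
        content = data.read()       # bytes, because of binary read mode
        raise RuntimeError('simulated upload failure')
except RuntimeError:
    pass

# The 'with' block closed the file even though an exception was raised.
file_closed_after_error = handle.closed
os.remove(path)
```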

Uploading the file (lines 32-38):

Line 32 uses the 'put_object' method of the S3 client to upload the file to the specified S3 bucket with server-side encryption.

32 s3.put_object(

33 Bucket=bucket_name,

Line 33 is the name of the target S3 bucket. Line 34 is the object key, that is, the name the file shall have in the S3 bucket.

34 Key=encrypted_file_name,

Line 35 is the content of the file to be uploaded (the 'data' file object).

35 Body=data,

Line 36 specifies the use of server-side encryption with AWS KMS (AWS Key Management Service).

36 ServerSideEncryption='aws:kms',

Line 37 is the KMS key ID or alias to use for encryption.

37 SSEKMSKeyId=kms_key_id

38 )
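Put together, the upload step might look like the sketch below. The `put_object` arguments follow lines 32-38; everything else is an assumption for illustration: the client is passed in as a hypothetical `s3_client` parameter rather than read from the module-level `s3` object, the log messages and the separate `FileNotFoundError` branch are invented, and the `True`/`False` return value is added so the result can be checked.

```python
import logging

logger = logging.getLogger()


def upload_encrypted_file(s3_client, bucket_name, file_name,
                          encrypted_file_name, kms_key_id):
    """Upload file_name as encrypted_file_name using SSE-KMS."""
    try:
        with open(file_name, 'rb') as data:
            s3_client.put_object(
                Bucket=bucket_name,              # target bucket
                Key=encrypted_file_name,         # object name in the bucket
                Body=data,                       # open file object
                ServerSideEncryption='aws:kms',  # encrypt with AWS KMS
                SSEKMSKeyId=kms_key_id,          # KMS key ID or alias
            )
        logger.info(f'File {file_name} uploaded as {encrypted_file_name}.')
        return True
    except FileNotFoundError:
        # Assumed branch: the local file does not exist.
        logger.error(f'File {file_name} was not found.')
        return False
    except Exception as e:
        # Assumed catch-all, mirroring the handler in create_bucket.
        logger.error(f'Error uploading file: {e}')
        return False
```

With real credentials configured, this could be called as `upload_encrypted_file(boto3.client('s3'), BUCKET_NAME, FILE_NAME, ENCRYPTED_FILE_NAME, KMS_KEY_ID)`.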

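If an error occurs, the error message includes the exception details (e), to indicate what went wrong.

The bucket policy is written as a Python dictionary. It sets "Version": "2012-10-17" and contains a statement with "Effect": "Deny", "Principal": "*", "Action": "s3:*", "Resource": f"arn:aws:s3:::{bucket_name}/*" and a "Condition" on "aws:SecureTransport": "false". Together these deny every S3 action on the objects in the bucket when a request is not sent over HTTPS.

The policy is applied to the bucket with the put_bucket_policy method (line 69). The dictionary is first passed to json.dumps(), which serializes a Python object to a JSON formatted string.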
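The policy fragments above can be sketched as follows. The keys and values come from the text; the surrounding structure is an assumption: the 'Statement' list and the 'Bool' condition operator are the usual shape for an HTTPS-only policy but are not visible in the original, and the function names `build_https_only_policy` and `set_bucket_policy`, as well as the `s3_client` parameter, are hypothetical.

```python
import json


def build_https_only_policy(bucket_name):
    """Return a policy dict that denies S3 requests not sent over HTTPS."""
    return {
        'Version': '2012-10-17',
        'Statement': [
            {
                'Effect': 'Deny',
                'Principal': '*',
                'Action': 's3:*',
                'Resource': f'arn:aws:s3:::{bucket_name}/*',
                # Assumed operator: 'Bool' is the usual way to test
                # aws:SecureTransport; the original text does not show it.
                'Condition': {'Bool': {'aws:SecureTransport': 'false'}},
            }
        ],
    }


def set_bucket_policy(s3_client, bucket_name):
    """Serialize the policy with json.dumps() and attach it to the bucket."""
    policy_json = json.dumps(build_https_only_policy(bucket_name))
    s3_client.put_bucket_policy(Bucket=bucket_name, Policy=policy_json)
    return policy_json
```

The put_bucket_policy method expects the policy as a JSON string rather than a dictionary, which is why the json.dumps() step is needed.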
